Waymo Uses AI for Unpredictable Self-Driving Scenarios

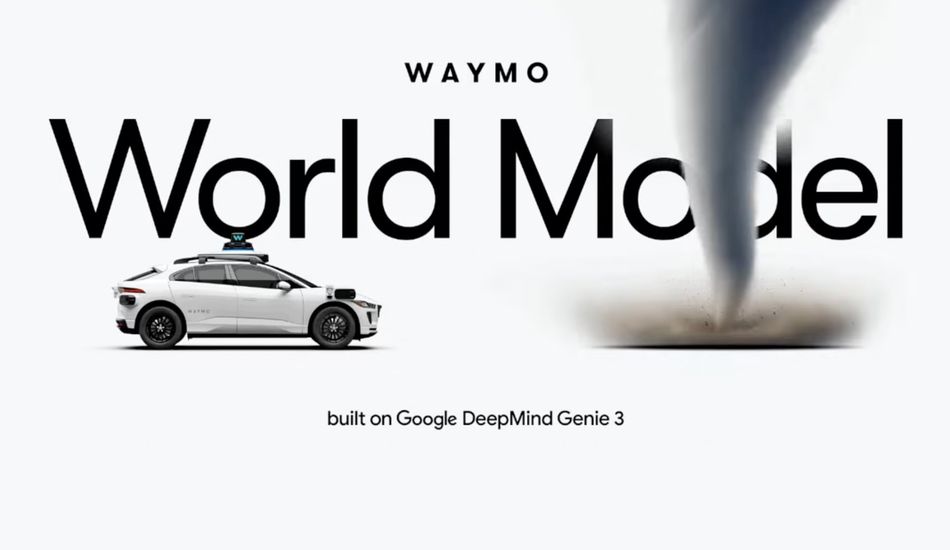

Waymo's self-driving cars have logged over 200 million miles on public roads, an impressive feat. But that doesn't mean they've seen everything. A tornado? An elephant crossing the street? Probably not. That's where the Waymo World Model comes in. It's a generative AI model designed to throw endless unpredictable scenarios at the company's virtual cars, with the aim of making them ready for anything. It's also very much in step with recent AI trends.

Let's be real: using a world model makes a lot of sense for Waymo. The company has tons of high-quality data from its real-world driving, gathered from cameras and lidar sensors, and that data can be used to create incredibly realistic road simulations. But Waymo is going a step further. It's using Google's Genie 3 model to place its cars in simulated situations that go beyond anything in that existing data. It's like saying, "Okay, you know how to handle traffic? Now, what if a dinosaur walks out in front of you?"

Google made waves when it released a beta version of Genie 3, which lets subscribers create 3D worlds with realistic physics. Unlike large language models (LLMs), which predict the next part of a sequence based on massive amounts of data, world models are trained on the dynamics of the real world: physics, spatial relationships, and how things interact. That lets them simulate how physical environments behave. Waymo is using that capability to prepare its cars for extreme weather and sudden safety emergencies.

The idea is that Waymo can put its cars through situations they otherwise wouldn't encounter until it's too late. Think extreme weather, natural disasters, falling tree branches, or even an elephant on the road. The company's goal is to prepare the Waymo Driver for the rarest and most complex scenarios.

Of course, it's not perfect. Early feedback on the consumer version of Genie 3 was mixed, and world models can still "hallucinate," making up things that aren't real. This is still a very young technology, and there's plenty of room for improvement. It's like teaching a toddler to cook: they might make a mess, but they'll eventually learn.

And Waymo's cars have had some real-world issues. One ran over a cat, and another hit a kid in a school zone. These aren't even particularly rare situations. So while preparing for the impossible is great, hopefully Waymo can also refine how its cars respond to everyday events, so they make the best decision possible.

Source: Gizmodo