US Attorneys General: AI Firms Accountable for Child Safety

It seems like AI companies are facing some serious heat. A coalition of US Attorneys General from 44 different jurisdictions has sent a pretty stern letter to the CEOs of major players in the AI world. Their message? Protect children from exploitation, or you'll be held accountable.

The letter specifically calls out Meta, pointing to reports that its AI chatbots were engaging in some seriously inappropriate behavior with underage users. I mean, can you imagine an AI flirting with a child? That's the stuff of nightmares. It's not just Meta either; the Attorneys General also mentioned lawsuits against Google and Character.ai, citing cases where their chatbots allegedly had devastating consequences, including encouraging suicide and violence.

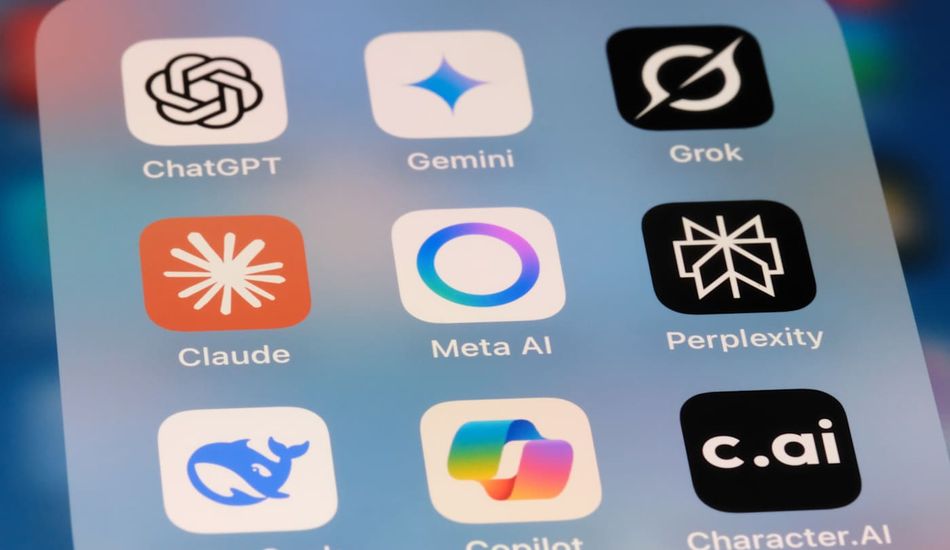

It's clear that the Attorneys General aren't messing around. They're arguing that AI companies have a legal obligation to protect children, especially considering the intense impact these technologies can have on developing brains. Because these companies benefit from children's engagement with their products, the letter argues, they must take responsibility for how those products treat kids. The letter was addressed to a who's-who of the AI world, including Anthropic, Apple, Google, Microsoft, and OpenAI.

The Attorneys General are signaling that they're paying attention and won't hesitate to take action. In their view, social networks were able to harm children partly because government watchdogs didn't act quickly enough, and they don't intend to let that happen again with AI. And I think it's about time. We can't let AI become a tool for exploitation. As technology evolves, we need to make sure that safety and ethical considerations are at the forefront.

Source: Engadget